Huge Opportunities in AI Models for Non-English Speakers

“Innovate with New Architecture”

[Interview with The Miilk] Pranav Mistry, CEO of Two Platforms

Pranav Mistry, the CEO of Two Platforms, is spearheading a revolution in AI architecture, distinguishing his company from existing AI giants. With the launch of the 'SUTRA' model, Mistry aims to serve the 6 billion non-English speakers around the world in countries like India and South Korea.

SUTRA promises performance akin to GPT-4 at a fraction of the cost, particularly excelling in languages other than English. The breakthrough lies in its dual transformer architecture, which separates conceptual learning from language processing.

Most AI models, including OpenAI's GPT-4, rely heavily on English data, making them proficient in English but less effective in other languages. "Non-English speakers, who comprise 80% of the global population, struggle to access generative AI technologies," Mistry shared in an exclusive interview at Two Platforms' Silicon Valley headquarters.

Mistry, renowned for his TED appearances and innovations at Samsung Electronics, including the Galaxy Gear and the digital human NEON, founded Two Platforms in 2021. The company has since attracted seed investment from South Korea's Naver and India's Reliance Jio, underscoring the potential of its AI technology.

A Different Approach: Introducing SUTRA

Unlike many startups that fine-tune existing open-source models or rely on APIs from major AI models, Two Platforms developed a completely new architecture for SUTRA. This innovation stems from Mistry's realization that English-centric models like GPT and Meta’s Llama models do not address the needs of the 6 billion non-English speakers.

Mistry's own experience as a native Gujarati speaker inspired this approach. "Current AI models are not designed to support multilingual capabilities effectively, disadvantaging non-English-speaking countries," he explained. Languages like Hindi, Arabic, Bengali, and Japanese, which each have hundreds of millions of speakers, are underrepresented in AI training data, resulting in poorer quality and higher costs.

For enterprise applications, English-centric models are significantly more expensive to use in non-English languages, which can require five to eight times more tokens than English. To address this, Two Platforms launched SUTRA, which aims to reduce linguistic inequality and improve performance.

Cost-Effective and High Performance

Could industry leaders like OpenAI resolve these performance and cost issues? Despite OpenAI's cost reductions with GPT-4o, Mistry believes there are inherent architectural limitations. SUTRA, optimized for multilingual use, has a competitive edge in non-English markets.

Mistry provided specific data: GPT-4o's output cost per million tokens is $15, while SUTRA's is only $1. For non-English languages, the efficiency advantage is even greater, as SUTRA requires fewer tokens to generate responses.

For instance, SUTRA generated only 19 tokens for the Hindi sentence "विश्व कप में ऑस्ट्रेलिया के खिलाफ भारत की हार के बाद भारतीय क्रिकेट प्रशंसक काफी निराश थे" (The Indian cricket fans were very disappointed after India's loss against Australia in the World Cup), compared with GPT-4's 82 tokens.

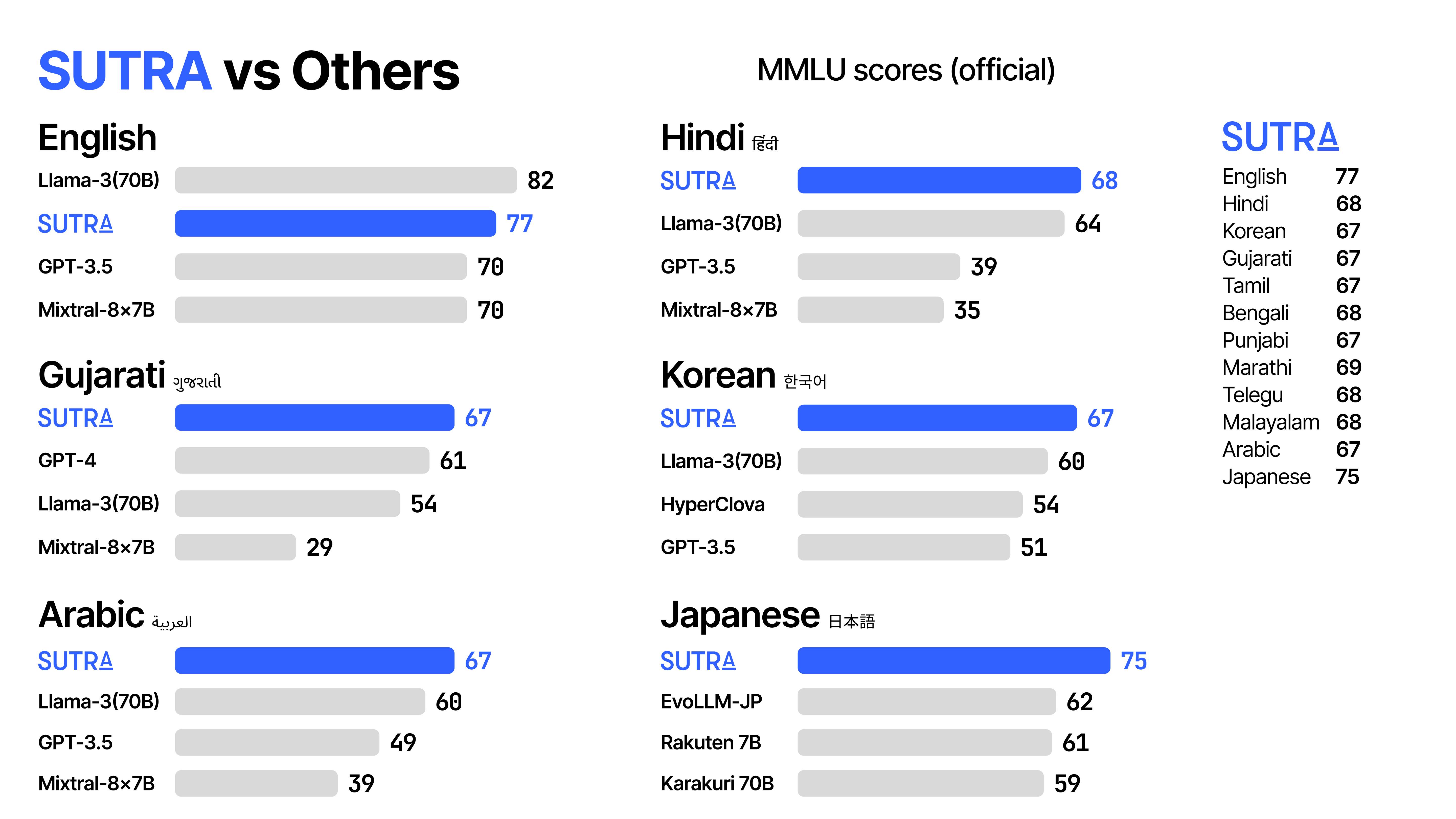
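The figures quoted above can be combined into a rough back-of-the-envelope comparison. The Python sketch below is illustrative only: the helper `output_cost_usd` is a hypothetical name, and applying GPT-4o's price to GPT-4's 82-token count is a simplifying assumption, since the article gives the token count for GPT-4 and the price for GPT-4o, and the two models need not tokenize identically.

```python
# Figures quoted in the article; everything else is an illustrative assumption.
GPT4_TOKENS = 82         # tokens for the Hindi sentence (GPT-4, per the article)
SUTRA_TOKENS = 19        # tokens for the same sentence (SUTRA, per the article)
GPT4O_USD_PER_M = 15.0   # output price per million tokens (GPT-4o, per the article)
SUTRA_USD_PER_M = 1.0    # output price per million tokens (SUTRA, per the article)


def output_cost_usd(tokens: int, usd_per_million: float) -> float:
    """Cost of producing `tokens` output tokens at a per-million-token price."""
    return tokens * usd_per_million / 1_000_000


# How many times more tokens GPT-4 needed for the same sentence.
token_ratio = GPT4_TOKENS / SUTRA_TOKENS

# How many times higher the quoted per-token price is.
price_ratio = GPT4O_USD_PER_M / SUTRA_USD_PER_M

# Assumption: GPT-4o would need the same 82 tokens as GPT-4 for this sentence.
combined_ratio = token_ratio * price_ratio

print(f"token ratio:    {token_ratio:.1f}x")
print(f"price ratio:    {price_ratio:.1f}x")
print(f"combined ratio: {combined_ratio:.1f}x (under the assumption above)")
print(f"cost of one sentence at GPT-4o pricing: ${output_cost_usd(GPT4_TOKENS, GPT4O_USD_PER_M):.6f}")
print(f"cost of one sentence at SUTRA pricing:  ${output_cost_usd(SUTRA_TOKENS, SUTRA_USD_PER_M):.6f}")
```

On these numbers, the Hindi sentence takes roughly 4.3 times as many tokens with GPT-4 as with SUTRA. The combined figure should be read as an upper-end illustration rather than a measured result, because it mixes a token count and a price from two different models.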

SUTRA supports over 50 languages and excels in multilingual benchmarks. For example, on the Korean MMLU benchmark, SUTRA scored 67, close to GPT-4's 72 and well ahead of HyperClova's 54 and GPT-3.5's 51. In Gujarati, SUTRA even outperformed GPT-4.

The Dual Transformer Advantage

The secret to SUTRA's success lies in its dual-transformer architecture, which decouples core conceptual understanding from language-specific processing. This innovation allows SUTRA to operate efficiently across multiple languages without extensive retraining. It's akin to how humans learn new languages.

SUTRA's tokenizer, trained with balanced multilingual data, reduces overall tokens by 80% to 200%, enabling efficient language processing. "In markets like India, the cost of using GPT-4 for non-English languages is prohibitive," Mistry noted. "SUTRA supports 50 languages and is one of the most cost-effective options."

Real-Time Learning and Global Adoption

SUTRA’s ‘online’ model continuously learns from real-time data, reducing hallucinations and enhancing accuracy. For example, SUTRA's AI search app, Geniya, provides real-time sports scores.

Major global companies like Reliance Jio are using SUTRA for multilingual customer support, and several South Korean enterprises are considering adoption due to its cost-effectiveness and responsiveness.

Two Platforms is also accumulating non-English language data through AI social apps like ZAPPY and Geniya. Since its launch, ZAPPY has generated millions of AI messages and attracted over 400,000 subscribers.

Keynote at The Wave Conference: "Question and Challenge"

Pranav Mistry will deliver a keynote at The Wave conference in Seoul, addressing the need for multilingual AI models and the importance of preserving linguistic diversity.

He believes the current era allows even small startups to challenge the status quo with smarter approaches and innovative architectures.

"In the AI era, you don't need thousands of people. You need smarter approaches, new architectures, and innovative thinking. What we've started is a new innovation," Mistry concluded.